Real-Time ETL

(Extract, Transform, Load)

Stop delaying decisions waiting for slow data.

Merge, clean, and structure workflows in real time.

Brands you know trust Ververica

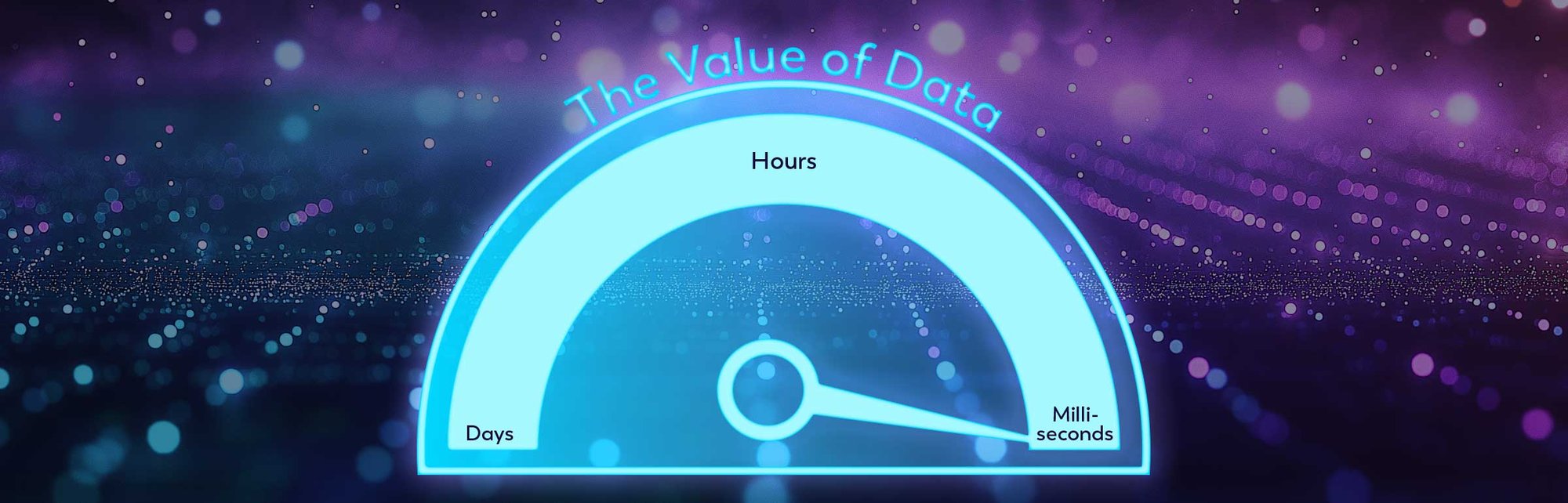

Say No To Slow Data.

Remove architectural complexities and deploy seamless data transformations to deliver accurate, real-time data insights at any scale. Ververica’s Unified Streaming Data Platform moves and processes data at millisecond latencies, so you can make decisions and take instant action on your data.

With Ververica, build seamless batch and streaming ETL workflows that drive real-time analytics, increase operational efficiency, streamline engineering workflows, reduce infrastructure costs, and integrate with modern AI/ML systems.

Business Challenges

Businesses need to process vast amounts of data efficiently to derive meaningful insights and stay competitive, but traditional ETL pipelines cannot keep up with the real-time demands of modern enterprises.

| Challenge | Solution |
| --- | --- |
| Traditional ETL systems process data in batches, which incurs high latency because data is stored before it is made available for processing and action. | Ververica enables real-time ETL, so applications can ingest, transform, and act on data instantly without having to store it first. |
| Legacy ETL pipelines are often complex, with multiple layers of processing that incur high maintenance costs and inefficiencies. | Ververica's simplified event-driven architecture allows on-the-fly transformations, reducing dependencies on intermediate storage layers. |
| Many ETL solutions struggle to perform well and scale smoothly as data volumes and velocity fluctuate. | Ververica’s cloud-native service dynamically scales based on workload demands, ensuring efficient resource utilization across multiple environments. |
| Data governance and security needs in ETL workflows are complex, yet essential. | Ververica's built-in data governance, data lineage, and security features help you comply with regulatory requirements. |
| Storing intermediate results for batch processing requires heavy infrastructure and adds significant storage costs. | Ververica enables continuous data transformations using intermediary results and local state storage without excessive overhead, reducing costs. |
| Traditional ETL tools often fail to recover efficiently from node failures, resulting in significant disruptions. | With built-in fault tolerance and stateful recovery, Ververica minimizes downtime and data loss. |
| Developing, maintaining, and modifying ETL pipelines in legacy systems requires extensive in-house effort and resources. | Ververica offers an ANSI SQL¹-compliant streaming query engine to simplify ETL development, making it more accessible to data engineers and data analysts. |
| ETL tools were initially built to support batch workloads and now struggle to handle continuous data streams efficiently. | Ververica unifies batch and streaming computation within a single runtime, making it ideal for all types of event-driven applications. |

¹ ANSI SQL: American National Standards Institute Structured Query Language
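To make the idea of fault tolerance with stateful recovery more concrete, here is a minimal sketch of the general checkpoint-and-replay pattern in plain Python. It is an illustration only, not Ververica's implementation: the `process` function, the `SimulatedCrash` exception, the `checkpoint` dictionary standing in for durable storage, and the sample events are all hypothetical.

```python
import copy


class SimulatedCrash(Exception):
    """Stands in for a node failure in this sketch."""


def process(events, checkpoint, checkpoint_every=2, crash_at=None):
    """Aggregate per-account totals, resuming from the given checkpoint.

    `checkpoint` plays the role of durable storage: it holds the offset of
    the next event to read and a snapshot of the running totals.
    """
    totals = copy.deepcopy(checkpoint["totals"])
    for i in range(checkpoint["offset"], len(events)):
        if crash_at is not None and i == crash_at:
            raise SimulatedCrash(f"failure before event {i}")
        account, amount = events[i]
        totals[account] = totals.get(account, 0) + amount
        # Periodically snapshot state and input position together.
        if (i + 1) % checkpoint_every == 0:
            checkpoint["offset"] = i + 1
            checkpoint["totals"] = copy.deepcopy(totals)
    return totals


events = [("a", 10), ("b", 5), ("a", 7), ("b", 1), ("a", 3)]

# Reference run without any failure.
expected = process(events, {"offset": 0, "totals": {}})

# Run that crashes partway through, then resumes from the last checkpoint.
checkpoint = {"offset": 0, "totals": {}}
try:
    process(events, checkpoint, crash_at=3)
except SimulatedCrash:
    pass
recovered = process(events, checkpoint)

print(recovered == expected)
```

Because the state snapshot and the input offset are saved together, the resumed run replays only the events after the last checkpoint and arrives at the same totals as the uninterrupted run.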

Why Ververica?

Eliminate complex and outdated ETL architectures and accelerate data transformation while reducing operational overhead. With Ververica, build reliable, robust, secure ETL workflows that access, transform, and store data from any source in real time while enforcing strong data governance and enabling faster decisions and actions.

Features of Ververica’s Unified Streaming Data Platform

Powered by VERA

With a powerful cloud-native engine, run low-latency ETL workflows to harness insights and act on data at any volume and scale.

Change Data Capture (CDC)

Access operational data like never before. Build real-time ETL pipelines with incremental data updates to reduce latency, storage overhead, and system load, while ensuring consistency.
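As a rough illustration of what "incremental data updates" means in a CDC pipeline, the sketch below applies a stream of change events to a target table one event at a time instead of reloading the whole source. The `ChangeEvent` type, the `apply_change` function, and the sample "customers" data are hypothetical names chosen for this example; they are not part of any Ververica API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row image; None for deletes


def apply_change(target: dict, event: ChangeEvent) -> None:
    """Apply a single change event to the target table in place."""
    if event.op in ("insert", "update"):
        target[event.key] = event.row
    elif event.op == "delete":
        target.pop(event.key, None)
    else:
        raise ValueError(f"unknown operation: {event.op}")


# Hypothetical change log captured from an operational "customers" table.
changes = [
    ChangeEvent("insert", 1, {"name": "Ada", "tier": "free"}),
    ChangeEvent("insert", 2, {"name": "Lin", "tier": "free"}),
    ChangeEvent("update", 1, {"name": "Ada", "tier": "pro"}),
    ChangeEvent("delete", 2),
]

replica = {}
for event in changes:
    apply_change(replica, event)

print(replica)  # {1: {'name': 'Ada', 'tier': 'pro'}}
```

Only the rows that changed are touched, which is why this style of pipeline can reduce latency and load on the source system compared with periodic full extracts.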

Data Lineage and Governance

Track data movement to ensure data transparency, accuracy, cleanliness, and compliance with regulatory and policy requirements.

Rich Connector Ecosystem

Easily connect multiple data sources, including data lakes, lakehouses, data warehouses, and more, in real time.

24/7 Observability & Monitoring

With real-time metrics, alerts, and logs, proactively monitor ETL performance and troubleshoot issues before they become problems.

Streamhouse

Data lakes don't have to be slow. Use unlimited storage, ingestion, and compute power to run massive data queries cost-effectively.

Key Benefits Using Ververica for ETL

From Medallion to Trophy

Traditional medallion architectures introduce inefficiencies, redundant storage, and higher costs. Ververica's architecture accelerates ETL with streaming SQL, CDC support, and automatic fault recovery. The result? Win at business with reduced infrastructure overhead and improved data freshness that supports faster, more cost-effective insights.

Data Architecture, Simplified

Replace complex, multi-layered, slow batch pipelines with continuous data processing. Still need batch processing for specific use cases? Ververica supports hybrid workloads, so a single ETL pipeline can seamlessly handle both real-time and historical data processing.

Living, Breathing Data

Ververica handles high-throughput event streams dynamically, optimizing resource use while automatically scaling to match any data volume and demand fluctuation. Whatever the workload, Ververica automatically adapts to fit.

Success Story

“Ververica helps us to bridge the gap between our old, big complicated monolithic systems and our modern systems... we need real time data, with fast processing capabilities, and Ververica provides us with (this, and) additional capabilities like monitoring our data flows which gives us resilience and recovery in case of any failures, which is a major advantage for us.”

-Sudha Ramiaj, Senior Technical Consultant, Uniper

Uniper is an international energy company that focuses on securing energy supply and accelerating the energy transition by generating power, trading energy, and providing energy solutions.

Other Ways to Use ETL

ETL is a foundational process for various data-driven applications.

Ververica’s Unified Streaming Data Platform empowers industries like financial services, e-commerce, gaming, healthcare, and technology to transform ETL processes into real-time analytics and actionable insights.

Ready to streamline your data pipelines with automated ETL?

Let’s talk

Ververica's Unified Streaming Data Platform helps organizations to create more value from their data, faster than ever. Generally, our customers are up and running in days and immediately start to see positive impact.

Once you submit this form, we will get in touch with you and arrange a follow-up call to demonstrate how our Platform can solve your particular use case.