6 things to consider when defining your Apache Flink cluster size

This is the first episode of a blog series providing some easy-to-digest best practices and advice on how to leverage the power of Apache Flink and make the best use of the different Flink features.

One of the questions the Apache Flink community asks most often is how to plan and calculate a Flink cluster size (i.e. how to determine the amount of resources you will need to run a specific Flink job). The right cluster size depends on various factors such as the use case, the scale of your application, and your specific service-level agreements (SLAs). Additional factors that affect your Flink cluster size include the type of checkpointing in your application (incremental versus full checkpoints), and whether your Flink job processing is continuous or bursty.

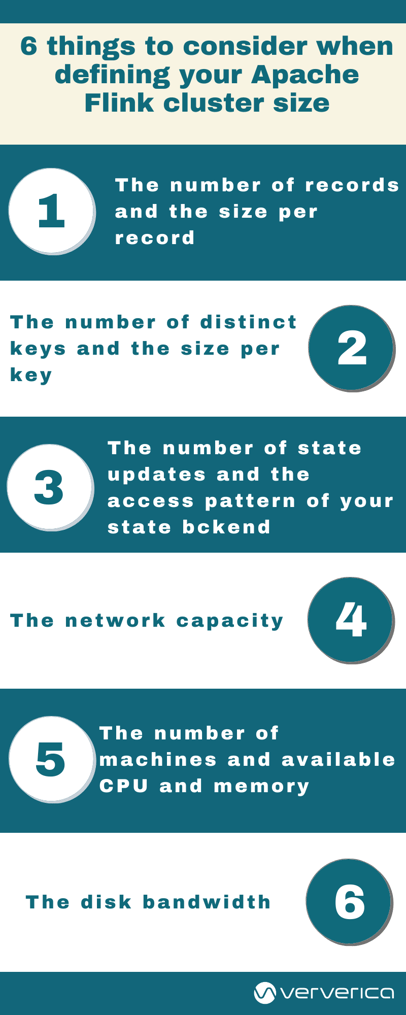

The following six aspects are a good starting point when defining your Apache Flink cluster size:

1. The number of records and the size per record

One of the first things to consider when defining your cluster size is the number of expected records per second arriving in the streaming framework as well as the size of each record. Different record types will have different sizes which will ultimately impact the required resources for your Flink application to operate smoothly.

2. The number of distinct keys and the state size per key

Both the number of keys in your application and the size of the state per key will impact the resources that you will need to effectively run a Flink application and avoid any backpressure.

3. The number of state updates and the access patterns of your state backend

The third consideration before sizing a Flink cluster relates to the number of state updates. Different access patterns on different state backends (for example, RocksDB versus the Java heap state backend) might significantly impact the size of your cluster and the required resources for your Flink job.

4. The network capacity

The load on your network comes not only from the Flink application itself but also from external services you might be talking to, such as Kafka or HDFS. Such external services might cause additional network traffic. For instance, enabling replication might create additional traffic between the message brokers.

5. The disk bandwidth

The disk bandwidth should be taken into consideration if your application relies on a disk-based state backend, like RocksDB, or if you are considering using Apache Kafka or HDFS.

6. The number of machines and their available CPU and memory

Last but not least, you will need to consider the number of available machines in your cluster and their available CPU and memory before proceeding with the application deployment. This helps ensure that you have enough processing power before moving your application to production.
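To make these considerations more concrete, here is a minimal back-of-the-envelope sketch in Python that combines the record rate and size, the number of keys and state size per key, and the number of machines. All figures and names (`Workload`, `per_machine`, the sample numbers) are hypothetical assumptions for illustration, not measurements or recommendations, and the sketch deliberately ignores serialization overhead, replication, shuffles, and checkpoint traffic.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload description; every value is an assumption."""
    records_per_second: int   # expected input rate
    bytes_per_record: int     # average serialized record size
    distinct_keys: int        # number of distinct keys in the application
    state_bytes_per_key: int  # average state kept per key


def ingest_bytes_per_second(w: Workload) -> int:
    """Raw input volume the cluster has to read every second."""
    return w.records_per_second * w.bytes_per_record


def total_state_bytes(w: Workload) -> int:
    """Total keyed state, before any backend or checkpoint overhead."""
    return w.distinct_keys * w.state_bytes_per_key


def per_machine(total: float, machines: int) -> float:
    """Share of a total that each machine carries if load is spread evenly."""
    return total / machines


# Example figures (assumed): 1M records/s of 2 KB each,
# 500M keys with 40 bytes of state per key, spread over 5 machines.
workload = Workload(1_000_000, 2_000, 500_000_000, 40)
machines = 5

ingest = ingest_bytes_per_second(workload)
state = total_state_bytes(workload)

print(f"ingest: {ingest / 1e9:.1f} GB/s total, "
      f"{per_machine(ingest, machines) / 1e6:.0f} MB/s per machine")
print(f"state:  {state / 1e9:.1f} GB total, "
      f"{per_machine(state, machines) / 1e9:.1f} GB per machine")
```

Comparing the per-machine figures against the network, disk, and memory actually available on each machine gives a first indication of whether the planned cluster is in the right range. Real jobs rarely distribute load perfectly evenly, so treat the result as a lower bound.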

More case-specific aspects to include in your consideration relate to your own or your organization’s accepted SLAs (service-level agreements). For instance, consider the amount of downtime your organization might be willing to accept, the accepted latency, or maximum throughput as such SLAs will have an impact on the size of your Flink cluster.

All the factors above should give you a good indication of the amount of resources required for your Flink job and serve as a guideline for normal Flink job operations. You should always add some buffer for recovery catch-up and for handling load spikes. For example, if your Flink job fails, the system will need additional resources to recover and catch up on the records that accumulated in the Kafka topic, or in whichever other message queue you use, while the job was down.
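The size of that buffer can be reasoned about with simple arithmetic. The sketch below is a simplified model under stated assumptions: the input rate stays constant, the job processes nothing during the outage, and after the restart it runs at a fixed maximum capacity. The function names and sample numbers are hypothetical.

```python
def catch_up_seconds(input_rate: float, capacity: float,
                     downtime_seconds: float) -> float:
    """Time needed to work off the backlog built up during an outage.

    While catching up, new records keep arriving at `input_rate`, so only
    the spare capacity (capacity - input_rate) reduces the backlog.
    """
    if capacity <= input_rate:
        raise ValueError("capacity must exceed input_rate to ever catch up")
    backlog = input_rate * downtime_seconds
    return backlog / (capacity - input_rate)


def required_capacity(input_rate: float, downtime_seconds: float,
                      target_catch_up_seconds: float) -> float:
    """Capacity needed to clear the backlog within a target catch-up time."""
    return input_rate * (1 + downtime_seconds / target_catch_up_seconds)


# Assumed example: 1M records/s input, 25% headroom, 5 minutes of downtime.
print(catch_up_seconds(1_000_000, 1_250_000, 300))   # 1200.0 seconds
# Capacity needed to catch up from the same outage within 10 minutes.
print(required_capacity(1_000_000, 300, 600))        # 1500000.0 records/s
```

In this model, 25% headroom turns a five-minute outage into twenty minutes of catch-up, which illustrates why the buffer should be derived from the downtime and latency your SLAs allow rather than chosen arbitrarily.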

If you want to explore more on this topic, you can visit one of our earlier blog posts to find out more detail. Our infographic provides a visual representation of the considerations discussed above that you can download and keep at hand when needed.

As always, we welcome any feedback or suggestions through our contact forms and through the Apache Flink mailing list.
