How OpenSSL in Ververica Platform 2.1 improves your Flink performance

With Ververica Platform, we are always striving to provide our users the best Flink experience they can get. Our newest release, version 2.1, includes a very nice performance improvement that does not require any user changes in the Flink applications or cluster setup: using OpenSSL for encrypted communication rather than relying on Java’s implementation. In our benchmarks, we were able to achieve throughput improvements of 50% to 200%. In the following sections, we discuss how SSL generally affects a Flink job's performance and how OpenSSL in Ververica Platform changes that. We also give an overview of the setup and the technical implementation.

Background

Flink’s network stack is built on top of Netty and is one of the core components that make up the flink-runtime module and sit at the heart of every Flink job. It connects individual work units (subtasks) from all TaskManagers and is where your streamed-in data flows. The network stack is crucial to the performance of your Flink job for both the throughput and latency you observe (for more information, please refer to the following blog posts on Flink’s network stack: A Deep-Dive into Flink's Network Stack, Flink Network Stack Vol. 2: Monitoring, Metrics, and that Backpressure Thing).

Apache Flink supports SSL as a standard protocol for securing all network communication within a cluster. By default, encryption uses Java's SSL implementation. Since Flink 1.9, the project has also supported OpenSSL as an alternative provider, which claims significant performance improvements.

Setup

Since release 2.1, Ververica Platform ships Flink 1.9 and 1.10 images with a dynamically-linked netty-tcnative version that matches the container they are built with. When you enable SSL (with the push of a button as shown below, or with a simple deployment flag in the REST API), we automatically select Netty’s OpenSSL implementation for Flink 1.10 and ensure compatibility between it and the default Java SSL setup. For Flink 1.9, please refer to the instructions and requirements in our documentation.

Benchmarks

To see the magnitude of the actual performance gains (if any), we set up two different jobs that process data from artificial inputs and discard their computed results (to reduce the effects of any external systems). The first example uses the Troubled Streaming Job from our Apache Flink Tuning & Troubleshooting training, which we regularly offer at the Flink Forward conference. The second job performs a more complex SQL query that joins a few tables to de-normalize dimensional data.

Troubled Streaming Job

This job reads from a fake Kafka source with a total of 8 partitions, 2 of which are idle. These (binary) events are then deserialized, grouped/keyed by location for a windowed aggregation, and put into one DiscardingSink for normal data and one for late data (see the job graph below).

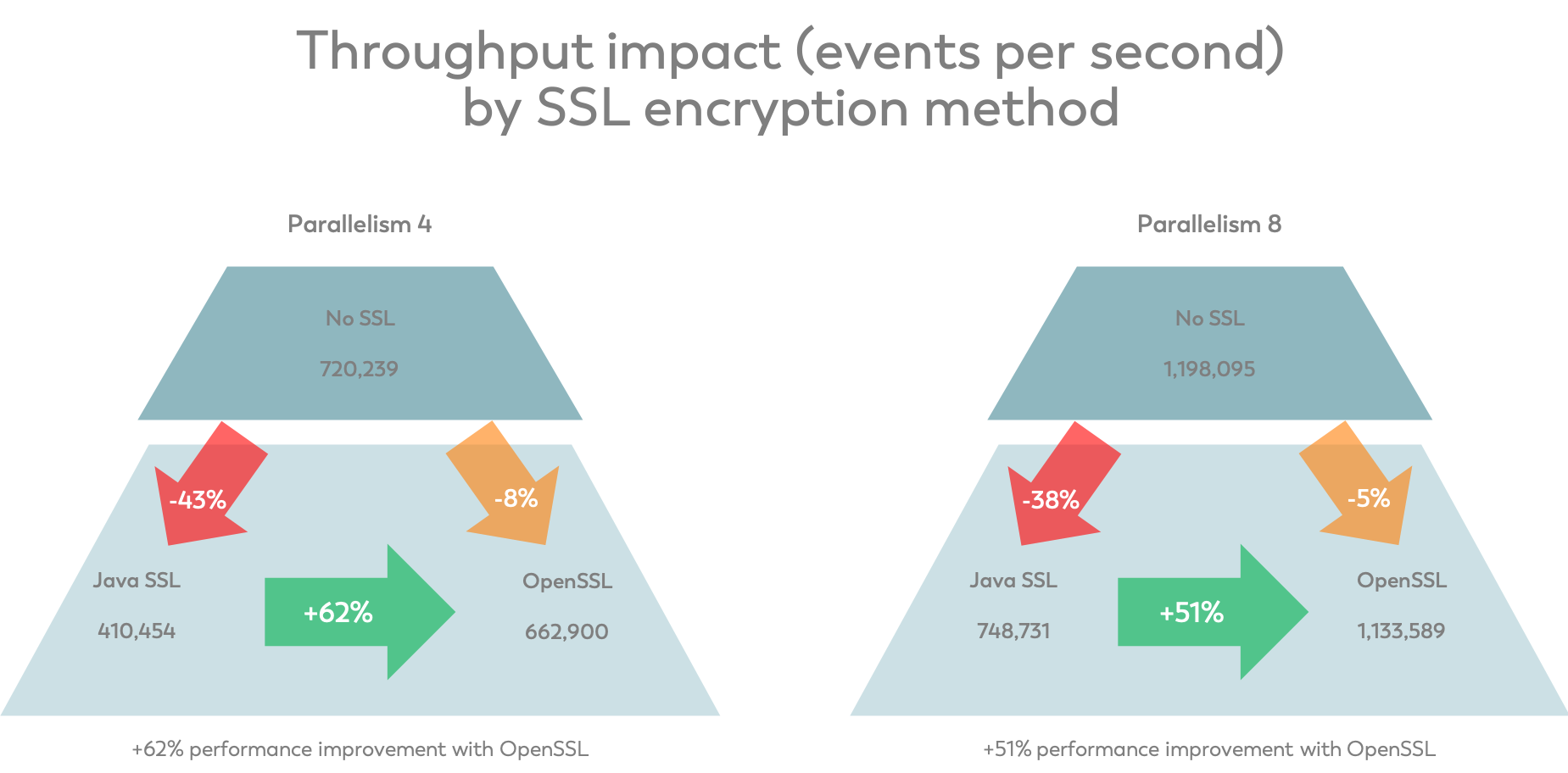

For the benchmark, we use our TroubledStreamingJobSolution43, measure the throughput as numRecordsOutPerSecond for the source task, and report the average throughput over the last 10 minutes of a 15-minute benchmark run. During this period, all benchmark runs showed rather stable results. As you can see, enabling Java-based SSL decreases the overall throughput by around 40%, while using OpenSSL only costs 5-15% of the performance. Compared to Java-based SSL, OpenSSL is thus up to 60% faster.
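The "up to 60%" figure follows from the two slowdowns quoted above. As a rough worked calculation, writing $T$ for the throughput without SSL (a symbol introduced here only for illustration) and treating the quoted percentages as exact:

$$
\frac{T_{\text{OpenSSL}}}{T_{\text{Java SSL}}} \approx \frac{(1 - 0.05)\,T}{(1 - 0.40)\,T} = \frac{0.95}{0.60} \approx 1.58
$$

At the other end of the OpenSSL range (a 15% cost), the ratio is $0.85 / 0.60 \approx 1.42$, so OpenSSL comes out roughly 40% to 60% faster than Java-based SSL in this job.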

Complex SQL Query

The Troubled Streaming Job from above has only one network shuffle in its job graph, which is typical of simple Flink jobs but does not cover more complex scenarios. Our second scenario is inspired by a real streaming SQL job we were given and joins an input stream from fact_table with a few dimensional tables to enrich incoming records. The query outlined below consists of 5 temporal table joins on randomly generated data (Strings of up to 100 characters each) in processing time (for simplicity):

    SELECT
      D1.col1 AS A,
      D1.col2 AS B,
      D1.col3 AS C,
      D1.col4 AS D,
      D1.col5 AS E,
      D2.col1 AS F,
      D2.col2 AS G,
      D2.col3 AS H,
      ...
      D5.col4 AS X,
      D5.col5 AS Y
    FROM
      fact_table,
      LATERAL TABLE (dimension_table1(f_proctime)) AS D1,
      LATERAL TABLE (dimension_table2(f_proctime)) AS D2,
      LATERAL TABLE (dimension_table3(f_proctime)) AS D3,
      LATERAL TABLE (dimension_table4(f_proctime)) AS D4,
      LATERAL TABLE (dimension_table5(f_proctime)) AS D5
    WHERE
      fact_table.dim1 = D1.id
      AND fact_table.dim2 = D2.id
      AND fact_table.dim3 = D3.id
      AND fact_table.dim4 = D4.id
      AND fact_table.dim5 = D5.id

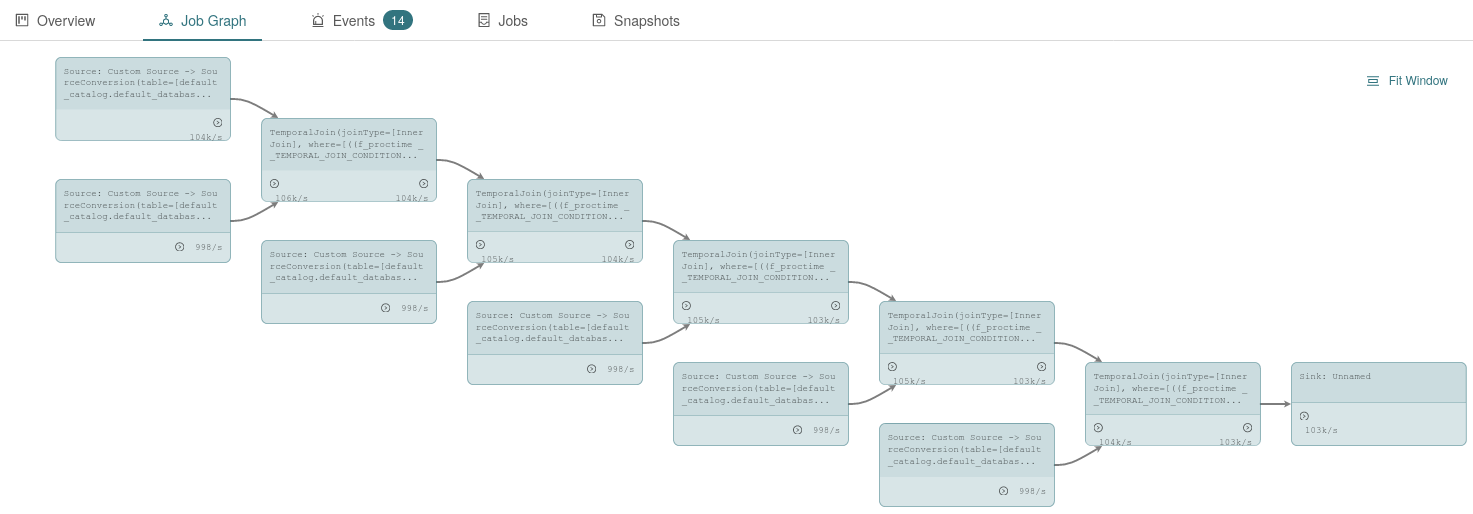

Flink’s Blink planner transforms this into the following job graph. For the benchmark, we limit each dimensional data input stream to 1,000 events per second and leave the fact stream unlimited. Finally, the result of the query is written to a DiscardingSink, as above.

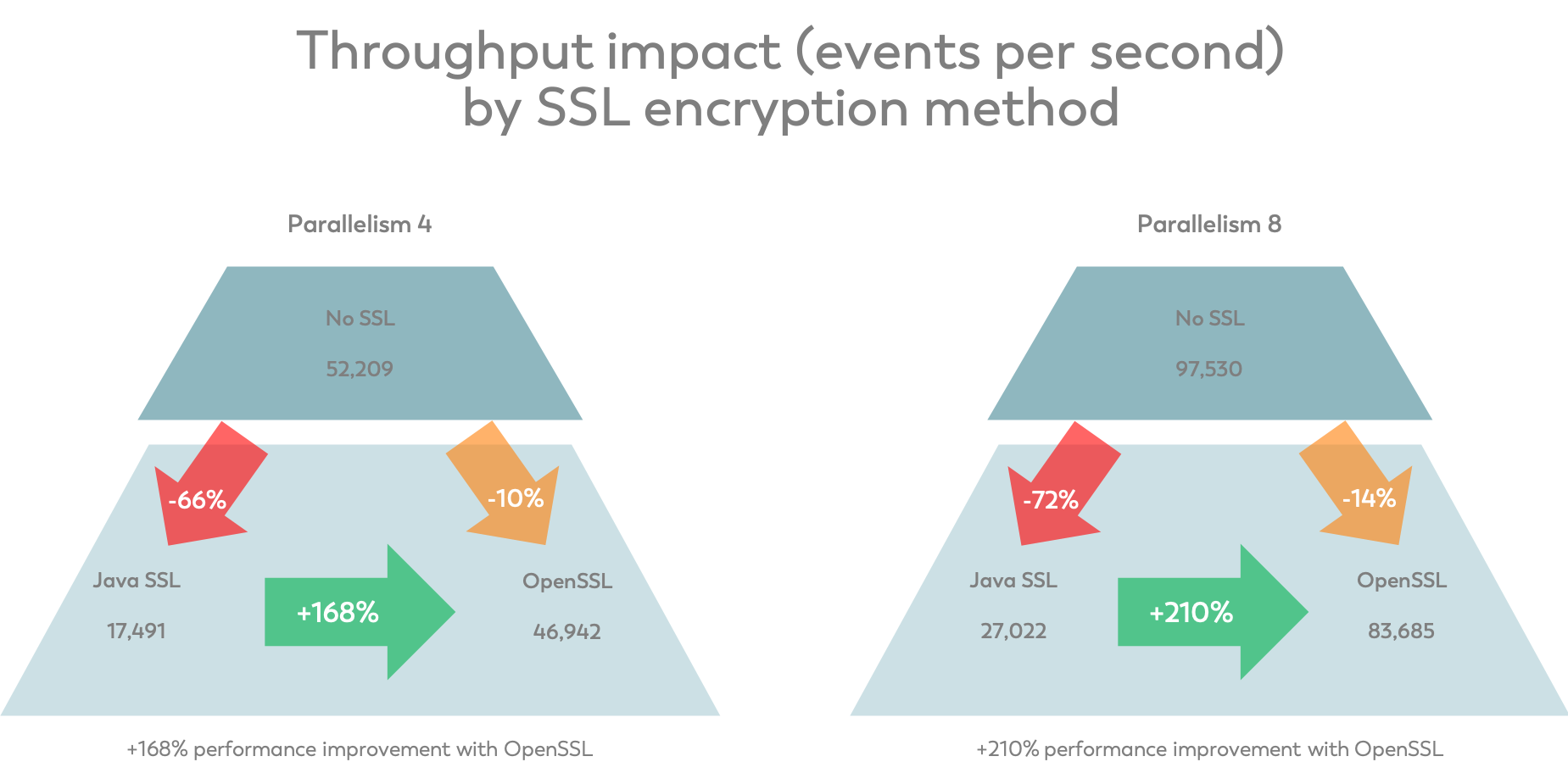

While String serialization and deserialization certainly account for a large share of the processing cost in this scenario, the network communication alone also adds some overhead. This job also takes a bit longer to reach a stable state, so we report the average throughput, i.e. numRecordsOutPerSecond, for all sources combined over the last 5 minutes of a 15-minute benchmark run. Compared to the Troubled Streaming Job above, this job has a few more network shuffles, and the effect of enabling SSL is therefore larger than before. Using Java-based SSL costs up to 70% of the throughput, while enabling OpenSSL reduces the number of processed records by only 10-15%. This makes your Flink job up to 3x as fast as with Java-based SSL, just by optimizing the SSL implementation!
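The 3x figure can be checked with the same kind of rough calculation, again writing $T$ for the throughput without SSL (an illustrative symbol, not a measured value) and taking the quoted costs at face value:

$$
\frac{T_{\text{OpenSSL}}}{T_{\text{Java SSL}}} \approx \frac{(1 - 0.10)\,T}{(1 - 0.70)\,T} = \frac{0.90}{0.30} = 3.0
$$

With the 15% OpenSSL cost instead, the ratio is $0.85 / 0.30 \approx 2.83$. A factor of 3 is the same as a 200% throughput improvement, which is the upper end of the range quoted in the introduction.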

Conclusion

As you can see from the results above, the improvements you can get from using OpenSSL for encrypted communication channels can be quite significant, with up to 3x the performance that Java SSL can achieve. At the same time, enabling OpenSSL encryption does not require any changes to either your Flink application’s implementation or its configuration. All you need is Ververica Platform 2.1 with Flink 1.9¹ or 1.10 and SSL enabled. If needed, or for your own benchmarks, you can switch back to the Java-based SSL implementation by setting security.ssl.provider: JDK. We are curious about the changes you observe in your jobs. Have a go, see for yourself, and report back to help spread the word.

¹ For backwards compatibility with your existing deployments and (older) Flink docker images they may run on, deployments on Flink 1.9 require setting the Flink configuration security.ssl.provider: OPENSSL explicitly to enable OpenSSL encryption. Please find details in the Ververica Platform documentation.

Appendix

- Technical Details

Support for running Apache Flink with OpenSSL is implemented using netty-tcnative, Netty’s fork of Tomcat Native. It works with OpenSSL (>= 1.0.2) and must be on the classpath in order for Flink to use it. The netty-tcnative jars come in a few flavors:

- dynamically linked to libapr-1 and OpenSSL (using your system’s libraries)
- statically linked against OpenSSL or boringssl (Google’s fork of OpenSSL); both bundle the libraries inside the jar

Configuration is done via security.ssl.provider; please see the Flink docs for details on how to use it without our Flink images.
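As a minimal sketch of what this could look like in a plain flink-conf.yaml, assuming a matching netty-tcnative jar is already on the classpath and that a keystore and truststore exist. The file paths and passwords below are hypothetical placeholders, not values from our setup:

```yaml
# Enable SSL for Flink's internal (TaskManager-to-TaskManager) communication.
security.ssl.internal.enabled: true

# Select the SSL implementation: OPENSSL (via netty-tcnative) or JDK (Java's default).
security.ssl.provider: OPENSSL

# Keystore and truststore for internal SSL.
# The paths and passwords are placeholders for illustration only.
security.ssl.internal.keystore: /path/to/internal.keystore
security.ssl.internal.keystore-password: changeit
security.ssl.internal.key-password: changeit
security.ssl.internal.truststore: /path/to/internal.keystore
security.ssl.internal.truststore-password: changeit
```

Setting security.ssl.provider back to JDK with everything else unchanged is a simple way to compare both implementations in your own benchmarks.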

- Why is OpenSSL encryption not provided as a default in Apache Flink?

All of the mentioned flavors of providing netty-tcnative have their flaws:

- Statically-linked binaries are the easiest way to get started. However, Apache Flink cannot ship these files due to conflicts between the Apache License and the dual OpenSSL and SSLeay license from OpenSSL 1.x.
- A dynamically-linked netty-tcnative does not suffer from this conflict but needs to be built specifically for the system (libraries) you want to deploy to; the default setup may or may not work on your system.
