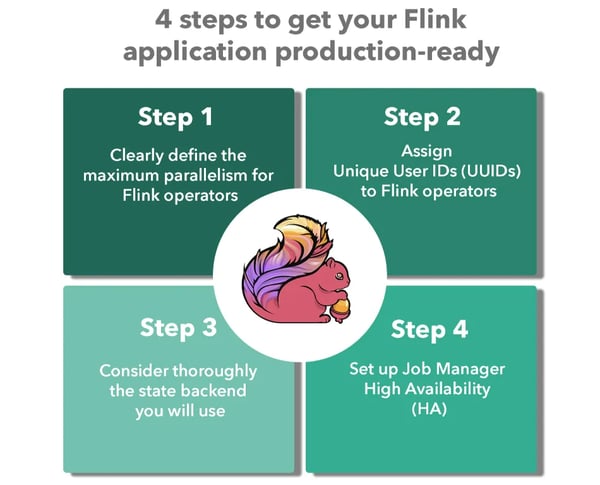

4 steps to get your Flink application ready for production

This post explains the configuration steps needed to get your Flink application ready for production. In the following sections, we give an overview of important configuration parameters that Engineering Leads, DevOps and Data Engineers need to consider carefully before bringing a Flink job to production. Apache Flink ships with out-of-the-box defaults for most configuration options, and in many instances these are a great starting point for the proof-of-concept (POC) phase or for exploring the different APIs and abstractions of Flink.

However, bringing a Flink application to production requires additional configuration so that the application can scale and rescale effectively and stays compatible with different system requirements, Flink versions and connectors through future iterations and potential upgrades.

Below, we collect some configuration points to review before moving your Flink application to production:

1. Clearly define the maximum parallelism for Flink operators

Flink’s keyed state is organized in so-called key groups, which are then distributed to the parallel instances of your Flink operators. A key group is the smallest atomic unit of state that can be redistributed, so the number of key groups also limits the scalability of a Flink application. The number of key groups per operator (its maximum parallelism) is chosen only once for each job, either manually or by default. The default is roughly operatorParallelism * 1.5, rounded up to the next power of two, with a lower bound of 128 and an upper bound of 32768. It can be defined manually per job and/or per operator via setMaxParallelism(int maxParallelism).
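To make the default concrete, here is a minimal sketch in plain Java. It is not Flink's own code: it assumes the default is operatorParallelism * 1.5 rounded up to a power of two and clamped to the bounds above, and that contiguous ranges of key groups are handed to the parallel instances. The names KeyGroupSketch, defaultMaxParallelism and subtaskForKeyGroup are hypothetical.

```java
class KeyGroupSketch {
    static final int LOWER_BOUND = 128;   // smallest default max parallelism
    static final int UPPER_BOUND = 32768; // largest allowed max parallelism

    // Smallest power of two that is >= x, for 1 <= x <= UPPER_BOUND.
    static int roundUpToPowerOfTwo(int x) {
        int p = 1;
        while (p < x) {
            p <<= 1;
        }
        return p;
    }

    // Assumed default: parallelism * 1.5, rounded up to a power of two, then clamped.
    static int defaultMaxParallelism(int operatorParallelism) {
        int scaled = Math.min(operatorParallelism + operatorParallelism / 2, UPPER_BOUND);
        return Math.max(roundUpToPowerOfTwo(scaled), LOWER_BOUND);
    }

    // Assumed assignment: 0-based index of the parallel instance that owns a key group.
    static int subtaskForKeyGroup(int maxParallelism, int parallelism, int keyGroup) {
        return keyGroup * parallelism / maxParallelism;
    }

    public static void main(String[] args) {
        for (int p : new int[] {4, 100, 200}) {
            System.out.println("parallelism " + p
                    + " -> default max parallelism " + defaultMaxParallelism(p));
        }
        // With 128 key groups and 4 parallel instances, each instance owns 32 key groups.
        System.out.println("key group 31 -> instance " + subtaskForKeyGroup(128, 4, 31));
        System.out.println("key group 32 -> instance " + subtaskForKeyGroup(128, 4, 32));
    }
}
```

Under these assumptions, a job that starts with a parallelism of 4 and relies on the default gets 128 key groups, so it can later be rescaled to at most 128 parallel instances.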

Any Flink job that moves into production should specify the maximum parallelism. Choose this value carefully, though, because at the moment a maximum parallelism cannot be changed once it has been set. A Flink job that changes its maximum parallelism can only restart from scratch with brand new state. Restoring from a previous checkpoint or savepoint while changing the maximum parallelism is currently not supported.
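A toy model helps to see why existing state cannot simply be carried over to a different maximum parallelism. The sketch below assumes a key's key group is its hash modulo the maximum parallelism (Flink additionally applies a murmur hash, which is omitted here). The names MaxParallelismChange, keyGroupFor and countMovedKeys are hypothetical.

```java
class MaxParallelismChange {
    // Stand-in for the key-to-key-group assignment: hash modulo max parallelism.
    static int keyGroupFor(Object key, int maxParallelism) {
        return Math.floorMod(key.hashCode(), maxParallelism);
    }

    // Counts the integer keys in [0, keyCount) whose key group differs
    // between the old and the new max parallelism.
    static int countMovedKeys(int keyCount, int oldMax, int newMax) {
        int moved = 0;
        for (int key = 0; key < keyCount; key++) {
            if (keyGroupFor(key, oldMax) != keyGroupFor(key, newMax)) {
                moved++;
            }
        }
        return moved;
    }

    public static void main(String[] args) {
        System.out.println("moved keys, 128 -> 128: " + countMovedKeys(1000, 128, 128));
        System.out.println("moved keys, 128 -> 256: " + countMovedKeys(1000, 128, 256));
    }
}
```

In this model, doubling the maximum parallelism moves roughly half of the keys to a different key group, so state that was written per key group no longer lines up with the keys that are routed to it. Changing only the parallelism does not have this problem, because whole key groups are reassigned and keys stay in the same group.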

We recommend setting the maximum parallelism high enough to cover the application's future scalability and availability needs, yet low enough to avoid hurting its overall performance. The trade-off exists because, with a high maximum parallelism, Flink has to maintain more metadata for its ability to rescale, which can increase the overall state size of your Flink application.

The Flink documentation provides additional information and guidance on tuning checkpoints for applications that use large state.

2. Assign Unique User IDs (UUIDs) to Flink operators

For stateful Flink applications, it is recommended to assign unique user IDs (UUIDs) to all operators. This is necessary because some of the built-in Flink operators (like windows) are stateful while others are stateless, which makes it hard to know which built-in operators actually hold state and which do not.

Flink operator UUIDs can be assigned using the uid(String uid) method. The operator UUIDs allow Apache Flink to effectively map operator state from a savepoint to the appropriate operators, an essential element for savepoints to work properly in a Flink application.
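The effect of explicit IDs can be illustrated with a toy model in plain Java. It is not Flink's ID-generation algorithm. It only assumes that an auto-generated ID depends on everything upstream of an operator, while an explicit ID is whatever string you assign. The names OperatorIdSketch, autoIds and restorable are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class OperatorIdSketch {
    // Toy auto-generated IDs: each ID encodes the operator and all operators before it.
    static List<String> autoIds(List<String> pipeline) {
        List<String> ids = new ArrayList<>();
        String path = "";
        for (String operator : pipeline) {
            path = path.isEmpty() ? operator : path + ">" + operator;
            ids.add(path);
        }
        return ids;
    }

    // Number of operators in the new job whose ID also appears in the savepoint.
    static int restorable(List<String> savepointIds, List<String> newJobIds) {
        Set<String> saved = new HashSet<>(savepointIds);
        int matches = 0;
        for (String id : newJobIds) {
            if (saved.contains(id)) {
                matches++;
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        List<String> v1 = List.of("source", "window", "sink");
        List<String> v2 = List.of("source", "filter", "window", "sink"); // filter added

        // Auto-generated IDs shift for everything downstream of the new operator.
        System.out.println("auto IDs matched: " + restorable(autoIds(v1), autoIds(v2)));
        // Explicit IDs (here simply the operator names) stay stable across versions.
        System.out.println("explicit IDs matched: " + restorable(v1, v2));
    }
}
```

In this model, adding a single filter leaves only the source matched when IDs are generated automatically, whereas all three original operators are matched when the IDs were assigned explicitly.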

3. Consider thoroughly the state backend of your Flink application

Developers and Engineering Leads should carefully consider the type of state backend for their Flink application before moving to production, because Apache Flink doesn’t support interoperability between state backends at the moment. State can therefore only be restored from a savepoint with the same state backend that took the savepoint in the first place.

Read more about the differences between the 3 types of state backends currently supported in Apache Flink in one of our earlier blog posts.

For production use cases, it is highly recommended to use the RocksDB state backend, since this is currently the only type of state backend that supports large state and asynchronous operations (like snapshots), which allow writing snapshots without stopping Flink’s operations. On the flip side, the RocksDB state backend can come with performance tradeoffs: every state access and retrieval requires serialization (and deserialization) to cross the JNI boundary, which might reduce an application’s throughput compared with the in-memory state backends.
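The serialization tradeoff can be sketched with a toy comparison in plain Java. It uses neither Flink nor RocksDB. It only contrasts state kept as live objects with state kept as bytes, which has to be decoded and re-encoded on every update. The class names below are hypothetical.

```java
import java.nio.ByteBuffer;
import java.util.HashMap;
import java.util.Map;

class StateAccessSketch {
    // In-memory style: values are kept as Java objects and updated directly.
    static class HeapCounterState {
        private final Map<String, Long> values = new HashMap<>();

        void increment(String key) {
            values.merge(key, 1L, Long::sum);
        }

        long get(String key) {
            return values.getOrDefault(key, 0L);
        }
    }

    // Serialized style: values are kept as bytes, so every access converts them.
    static class SerializedCounterState {
        private final Map<String, byte[]> values = new HashMap<>();
        int serializationCalls = 0; // counts both serialize and deserialize calls

        private byte[] serialize(long value) {
            serializationCalls++;
            return ByteBuffer.allocate(Long.BYTES).putLong(value).array();
        }

        private long deserialize(byte[] bytes) {
            serializationCalls++;
            return ByteBuffer.wrap(bytes).getLong();
        }

        void increment(String key) {
            byte[] stored = values.get(key);
            long current = (stored == null) ? 0L : deserialize(stored);
            values.put(key, serialize(current + 1));
        }

        long get(String key) {
            byte[] stored = values.get(key);
            return (stored == null) ? 0L : deserialize(stored);
        }
    }

    public static void main(String[] args) {
        HeapCounterState heap = new HeapCounterState();
        SerializedCounterState serialized = new SerializedCounterState();
        for (int i = 0; i < 1000; i++) {
            heap.increment("clicks");
            serialized.increment("clicks");
        }
        System.out.println("heap value: " + heap.get("clicks"));
        System.out.println("serialized value: " + serialized.get("clicks"));
        System.out.println("serialization calls: " + serialized.serializationCalls);
    }
}
```

Both variants end up with the same counter value, but the serialized variant pays for a decode and an encode on nearly every update. With real record types and a native call in between, this overhead is what can lower throughput.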

4. Set up Job Manager High Availability (HA)

A High Availability (HA) configuration ensures that the application recovers automatically from potential failures of the JobManager component, which reduces downtime to a bare minimum. The JobManager’s primary responsibility is the coordination of all Flink deployments, such as scheduling and appropriate resource allocation.

By default, Flink sets up one JobManager instance per Flink cluster. This creates a single point of failure (SPOF): if the JobManager crashes, no new programs can be submitted, and running programs fail. It is therefore highly recommended that High Availability (HA) is configured for production use cases.

Ververica Platform (formerly data Artisans Platform) provides a production-ready stream processing infrastructure that includes open source Apache Flink and makes your application production-ready without additional manual configurations or deployments. We encourage you to download the Ververica Platform Kubernetes trial to benefit from 10 non-production CPU cores and test the functionality of the product for 30 days!

The above 4 steps follow best practices set by the community. They allow Flink applications to scale arbitrarily while maintaining state, handle bigger volumes of data streams and larger state sizes, and increase their availability guarantees, all of which are specific requirements for production use cases. The Deployment & Operations section in Apache Flink’s documentation provides additional guidance and support for stable Flink operations. We highly recommend following the above steps and reading the documentation carefully before moving your application to production.
